Dr. rer. nat. Jonathan Klein

I am a research scientist in computer science / visual computing.

My current research focuses on the intersection of computer graphics and simulation methods and their applications in machine learning.

Previously, I did my PhD on non-line-of-sight imaging, a novel remote sensing modality that reveals objects hidden from the direct line of sight of a camera.

Academic Career

Research Scientist (since 2023)

working with Prof. Dr. Dominik L. Michels at the Visual Computing Center (VCC) / Computational Sciences Group

Postdoc (started 2022)

working with Prof. Dr. Dominik L. Michels at the Visual Computing Center (VCC) / Computational Sciences Group

PhD (finished 2021)

Computational Non-Line-of-Sight Imaging

supervised by Prof. Dr. Matthias B. Hullin at the Institute for Computer Science II / Computer Graphics, University of Bonn

Master of Science (finished 2014)

Master thesis on Correction of Multipath-Effects in Time-of-Flight Range Data

supervised by Prof. Dr. Andreas Kolb at the Computer Graphics and Multimedia Systems Group, University of Siegen

Bachelor of Science (finished 2012)

Bachelor thesis on Terrain Shading

supervised by Prof. Dr. Andreas Kolb at the Computer Graphics and Multimedia Systems Group, University of Siegen

Publications

My ORCID is 0000-0001-6560-0988. Citations are available at my Google Scholar profile.

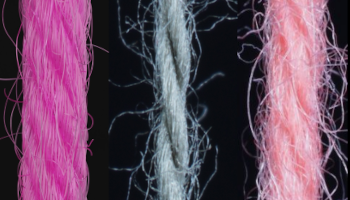

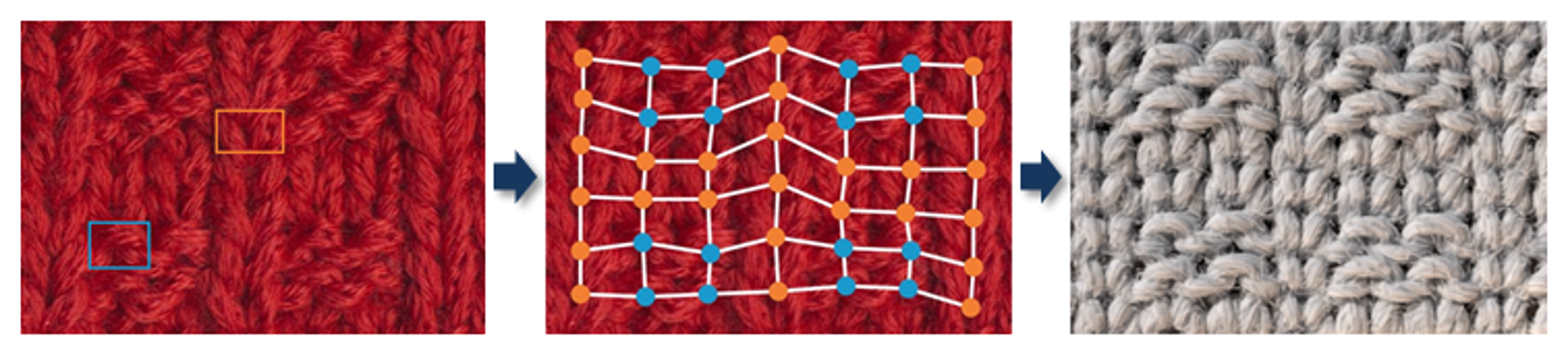

Neural Inverse Procedural Modeling of Knitting Yarns from Images

In: Computers & Graphics, 2024

We investigate the capabilities of neural inverse procedural modeling to infer high-quality procedural yarn models with fiber-level details from single images of yarn samples. While directly inferring all parameters of the underlying yarn model with a single neural network may seem an intuitive choice, we show that the complexity of yarn structures, in terms of the twisting and migration characteristics of the involved fibers, is better addressed by ensembles of networks that each focus on individual characteristics. We analyze the effect of different loss functions, including a parameter loss that penalizes the deviation of inferred parameters from ground-truth annotations, a reconstruction loss that enforces similar statistics between the image generated for the estimated parameters and the training images, and an additional regularization term that explicitly penalizes deviations between the latent codes of synthetic images and the average latent code of real images in the latent space of the encoder. We demonstrate that combining a carefully designed parametric, procedural yarn model with the respective network ensembles and loss functions allows robust parameter inference even when training solely on synthetic data. Since our approach relies on a yarn database with parameter annotations and we are not aware of any such dataset, we additionally provide, to the best of our knowledge, the first dataset of yarn images annotated with the respective yarn parameters. For this purpose, we use a novel yarn generator that improves the realism of the produced results over previous approaches.

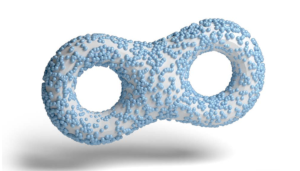

A Lennard-Jones Layer for Distribution Normalization

In: arXiv, 2024

We introduce the Lennard-Jones layer (LJL) for equalizing the density of 2D and 3D point clouds by systematically rearranging points without destroying their overall structure (distribution normalization). LJL simulates a dissipative process of repulsive and weakly attractive interactions between individual points, considering the nearest neighbor of each point at a given moment in time. This pushes the particles into a potential valley, reaching a well-defined stable configuration that approximates an equidistant sampling after the stabilization process. We apply LJLs to redistribute randomly generated point clouds into a randomized uniform distribution. Moreover, LJLs are embedded in the generation process of point cloud networks by adding them at later stages of the inference process. The improvements in 3D point cloud generation achieved with LJLs are evaluated qualitatively and quantitatively. Finally, we apply LJLs to improve the point distribution of a score-based 3D point cloud denoising network. In general, we demonstrate that LJLs are effective for distribution normalization and can be applied at negligible cost without retraining the given neural network.
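
As a rough illustration of the mechanism described above, the following NumPy sketch moves every point of a 2D point set according to the Lennard-Jones force exerted by its current nearest neighbor. It is not the published layer: the overdamped update (displacement proportional to force, standing in for the dissipative process), the step size, the displacement cap, the clamping to the unit square and all function and parameter names (`lj_relax`, `sigma`, `max_disp_frac`) are our own assumptions.

```python
import numpy as np


def nearest_neighbor_offsets(points):
    """Return p_i - p_nn(i) and |p_i - p_nn(i)| for every point (brute force, O(n^2))."""
    diff = points[:, None, :] - points[None, :, :]  # diff[i, j] = p_i - p_j
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)                   # a point is not its own neighbor
    nn = np.argmin(dist, axis=1)
    idx = np.arange(len(points))
    return diff[idx, nn], dist[idx, nn]


def lj_relax(points, sigma, epsilon=1.0, iterations=300, max_disp_frac=0.1):
    """Hypothetical overdamped nearest-neighbor Lennard-Jones relaxation in the unit square."""
    step = 0.005 * sigma**2 / epsilon  # small enough for the damped update to settle at the minimum
    max_disp = max_disp_frac * sigma   # cap the huge moves caused by the steep short-range repulsion
    pts = np.array(points, dtype=float)
    for _ in range(iterations):
        offset, r = nearest_neighbor_offsets(pts)
        r = np.maximum(r, 1e-9 * sigma)                   # guard against overflow for near-coincident points
        s6 = (sigma / r) ** 6
        force = 24.0 * epsilon / r * (2.0 * s6**2 - s6)   # -dV/dr: positive repels, negative attracts
        disp = step * force[:, None] * offset / r[:, None]
        norm = np.linalg.norm(disp, axis=1, keepdims=True)
        disp *= np.minimum(1.0, max_disp / np.maximum(norm, 1e-12))
        pts = np.clip(pts + disp, 0.0, 1.0)               # keep points inside the domain
    return pts


rng = np.random.default_rng(0)
n = 200
pts = rng.random((n, 2))
spacing = np.sqrt(2.0 / (np.sqrt(3.0) * n))  # hexagonal-packing spacing for n points per unit area
sigma = spacing / 2 ** (1 / 6)               # place the potential minimum at that spacing
relaxed = lj_relax(pts, sigma)
```

Placing the potential minimum 2^(1/6)·sigma at the expected point spacing is only one heuristic choice; for large point clouds the brute-force neighbor search would be replaced by a spatial index.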

Gazebo Plants: Simulating Plant-Robot Interaction with Cosserat Rods

In: arXiv, 2024

Robotic harvesting has the potential to positively impact agricultural productivity, reduce costs, improve food quality, enhance sustainability, and address labor shortages. In the rapidly advancing field of agricultural robotics, training robots in virtual environments has become essential. Generating training data to automate the underlying computer vision tasks, such as image segmentation, object detection, and classification, also relies heavily on such virtual environments, as synthetic data is often required to overcome the scarcity and limited variety of real data sets. However, physics engines commonly employed within the robotics community, such as ODE, Simbody, Bullet, and DART, primarily support the motion and collision interaction of rigid bodies. This inherent limitation hinders experimentation and progress in handling non-rigid objects such as plants and crops. In this contribution, we present a plugin for the Gazebo simulation platform based on Cosserat rods to model plant motion. It enables the simulation of plants and their interaction with the environment. We demonstrate that, using our plugin, users can conduct harvesting simulations in Gazebo by simulating a robotic arm picking fruits, and achieve results comparable to real-world experiments.

A Physically-inspired Approach to the Simulation of Plant Wilting

In: ACM Transactions on Graphics (SIGGRAPH), 2023

Plants are among the most complex objects to be modeled in computer graphics. While a large body of work is concerned with structural modeling and the dynamic reaction to external forces, our work focuses on the dynamic deformation caused by plant-internal wilting processes. To this end, we motivate the simulation of water transport inside the plant, which is a key driver of the wilting process. We then map the change in water content of individual plant parts to branch stiffness values and obtain the wilted plant shape through a position-based dynamics simulation. We show that our approach can recreate measured wilting processes, and does so with higher fidelity than approaches that ignore the internal water flow. Realistic plant wilting is not only important in a computer graphics context, but can also aid the development of machine learning algorithms in agricultural applications through the generation of synthetic training data.

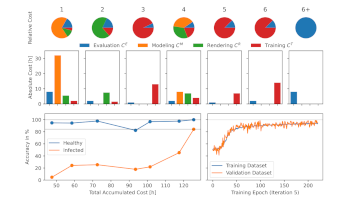

Synthetic Data at Scale: A Paradigm to Efficiently Leverage Machine Learning in Agriculture

In: Social Science Research Network, 2023

The rise of artificial intelligence (AI), and in particular of modern machine learning (ML) algorithms, has been one of the most exciting developments in agriculture within the last decade. While undisputedly powerful, their main drawback remains the need for sufficient and diverse training data. The collection of real datasets and their annotation are the main cost drivers of ML developments, and while promising results on synthetically generated training data have been shown, generating such data poses difficulties of its own. In this contribution, we present a paradigm for the iterative, cost-efficient generation of synthetic training data. Its application is demonstrated by developing a low-cost early disease detector for tomato plants (Solanum lycopersicum) using synthetic training data. In particular, a binary classifier is developed to distinguish between healthy and infected tomato plants based on photographs taken by an unmanned aerial vehicle (UAV) in a greenhouse complex. The classifier is trained exclusively on synthetic images, which are generated iteratively to obtain optimal performance. In contrast to other approaches that rely on a human assessment of the similarity between real and synthetic data, we introduce a structured, quantitative approach. We find that our approach leads to a more cost-efficient use of ML-aided computer vision tasks in agriculture.

Rhizomorph: The Coordinated Function of Shoots and Roots

In: ACM Transactions on Graphics (SIGGRAPH), 2023

Computer graphics has dedicated a considerable amount of effort to generating realistic models of trees and plants. Many existing methods leverage procedural modeling algorithms, which often consider biological findings, to generate the branching structures of individual trees. While the realism of tree models generated by these algorithms steadily increases, most approaches neglect to model the root system of trees. However, the root system not only adds to the visual realism of tree models but also plays an important role in the development of trees. In this paper, we advance tree modeling in the following ways: First, we define a physically plausible soil model to simulate resource gradients, such as water and nutrients. Second, we propose a novel developmental procedural model for tree roots that enables us to emergently develop root systems that adapt to various soil types. Third, we define long-distance signaling to coordinate the development of shoots and roots. We show that our advanced procedural model of tree development enables, for the first time, the generation of trees with their root systems.

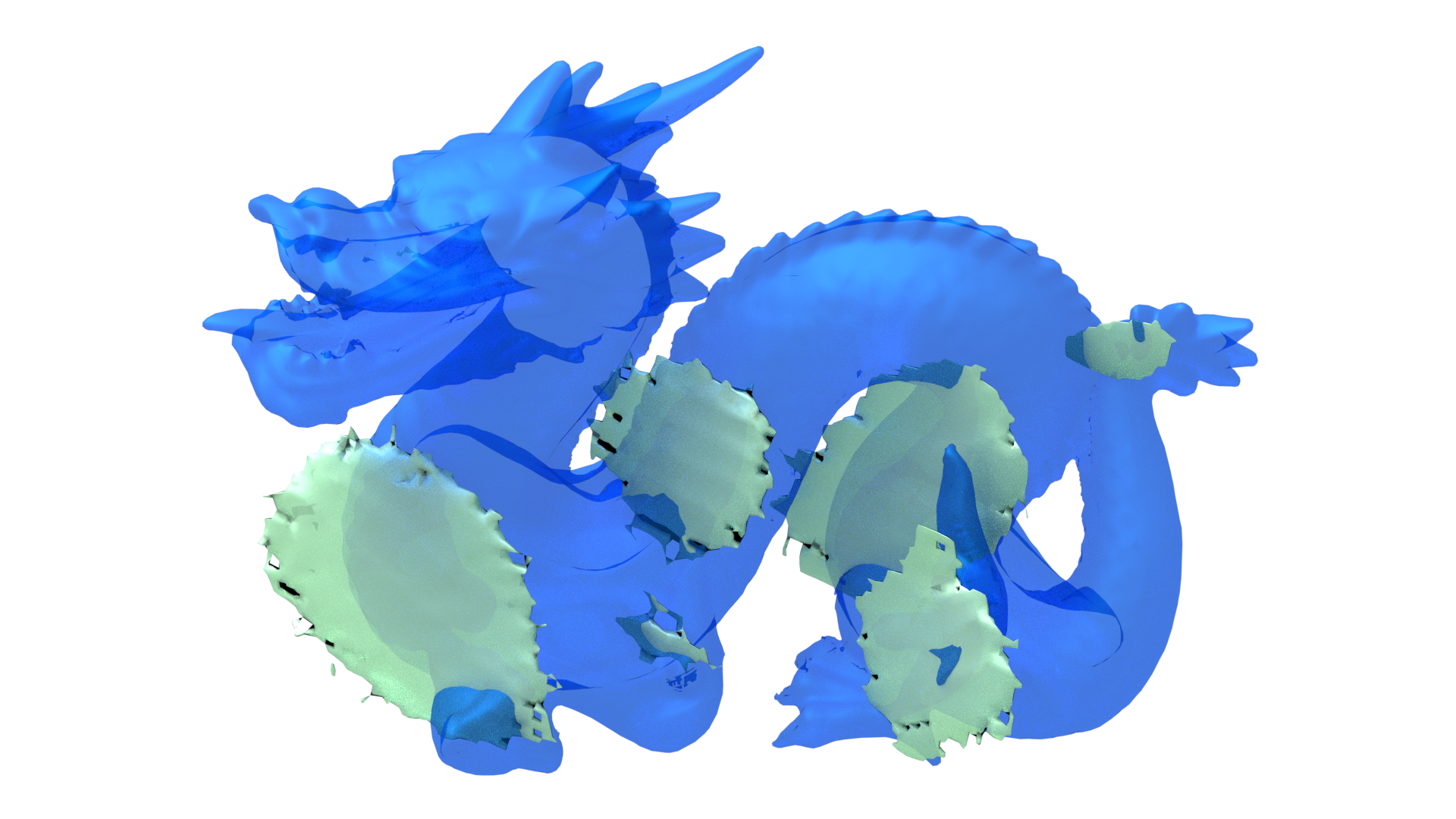

Zero-Level-Set Encoder for Neural Distance Fields

In: arXiv, 2023

Neural shape representation generally refers to representing 3D geometry using neural networks, e.g., to compute a signed distance or occupancy value at a specific spatial position. Previous methods tend to rely on the auto-decoder paradigm, which often requires densely sampled and accurate signed distances to be known during training and testing, as well as an additional optimization loop during inference. This introduces considerable computational overhead, in addition to requiring signed distances to be computed analytically, even during testing. In this paper, we present a novel encoder-decoder neural network for embedding 3D shapes in a single forward pass. Our architecture is based on a multi-scale hybrid system incorporating graph-based and voxel-based components, as well as a continuously differentiable decoder. Furthermore, the network is trained to solve the Eikonal equation and only requires knowledge of the zero-level set for training and inference. Additional volumetric samples can be generated on the fly and incorporated in an unsupervised manner. This means that, in contrast to most previous work, our network is able to output valid signed distance fields without explicit prior knowledge of non-zero distance values or shape occupancy. In other words, our network computes approximate solutions to the boundary-value problem of the Eikonal equation. It also requires only a single forward pass during inference, instead of the common latent code optimization. We further propose a modification of the loss function for cases in which surface normals are not well defined, e.g., for non-watertight surface meshes and non-manifold geometry. Finally, we demonstrate the efficacy, generalizability, and scalability of our method on datasets of deforming 3D shapes and on single-class and multi-class encoding, showcasing a wide range of possible applications.
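
The training signal described above can be illustrated with a small NumPy sketch that scores a candidate distance field with two terms: a zero-level-set term on surface samples and an Eikonal term, mean((|grad f| - 1)^2), on freely drawn volumetric samples. This is only an assumed, simplified form of such a loss: finite differences stand in for automatic differentiation, an analytic sphere stands in for a learned network, the two terms are not weighted, and the function names are hypothetical.

```python
import numpy as np


def numerical_gradient(f, x, h=1e-4):
    """Central-difference gradient of a scalar field f at points x of shape (n, 3)."""
    grad = np.zeros_like(x)
    for k in range(x.shape[1]):
        e = np.zeros(x.shape[1])
        e[k] = h
        grad[:, k] = (f(x + e) - f(x - e)) / (2.0 * h)
    return grad


def zero_level_set_losses(f, surface_pts, volume_pts):
    """Return (surface term, Eikonal term) for a candidate distance field f."""
    surface_term = np.mean(f(surface_pts) ** 2)   # f must vanish on the surface
    grad_norm = np.linalg.norm(numerical_gradient(f, volume_pts), axis=1)
    eikonal_term = np.mean((grad_norm - 1.0) ** 2)  # |grad f| = 1 everywhere else
    return surface_term, eikonal_term


def sdf(x):
    """True signed distance to a sphere of radius 0.5."""
    return np.linalg.norm(x, axis=1) - 0.5


def implicit(x):
    """Same zero-level set as sdf, but not a distance field."""
    return np.sum(x**2, axis=1) - 0.25


rng = np.random.default_rng(0)
dirs = rng.normal(size=(500, 3))
surface_pts = 0.5 * dirs / np.linalg.norm(dirs, axis=1, keepdims=True)  # samples on the sphere
volume_pts = rng.uniform(-1.0, 1.0, size=(2000, 3))                      # free volumetric samples

sdf_losses = zero_level_set_losses(sdf, surface_pts, volume_pts)
implicit_losses = zero_level_set_losses(implicit, surface_pts, volume_pts)
```

Both fields vanish on the sphere, so only the Eikonal term distinguishes the true signed distance `sdf` (term close to zero) from `implicit`, whose gradient norm is 2|x| rather than 1.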

Domain Adaptation with Morphologic Segmentation

In: Autonomous Driving: Perception, Prediction and Planning (ADP3) at IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021

We present a novel domain adaptation framework that uses morphologic segmentation to translate images from arbitrary input domains (real and synthetic) into a uniform output domain. Our framework is based on an established image-to-image translation pipeline that allows us to first transform the input image into a generalized representation that encodes morphology and semantics, the edge-plus-segmentation map (EPS), which is then transformed into an output domain. Images transformed into the output domain are photo-realistic and free of artifacts that are commonly present across different real (e.g., lens flare, motion blur) and synthetic (e.g., unrealistic textures, simplified geometry) data sets. Our goal is to establish a preprocessing step that unifies data from multiple sources into a common representation that facilitates training downstream tasks in computer vision. This way, neural networks for existing tasks can be trained on a larger variety of training data, while they are also less affected by overfitting to specific data sets. We showcase the effectiveness of our approach by qualitatively and quantitatively evaluating our method on four data sets of simulated and real data of urban scenes.

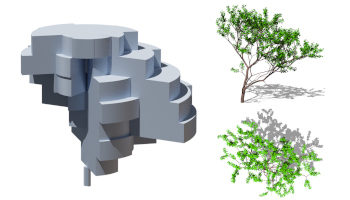

Learning to Reconstruct Botanical Trees from Single Images

In: ACM Transactions on Graphics (SIGGRAPH Asia), 2021

We introduce a novel method for reconstructing the 3D geometry of botanical trees from single photographs. Faithfully reconstructing a tree from single-view sensor data is a challenging and open problem because many possible 3D trees fit the tree's shape observed from a single view. We address this challenge by defining a reconstruction pipeline based on three neural networks. The networks simultaneously mask out trees in input photographs, identify a tree's species, and obtain its 3D radial bounding volume, our novel 3D representation for botanical trees. Radial bounding volumes (RBV) are used to orchestrate a procedural model primed on learned parameters to grow a tree that matches the main branching structure and the overall shape of the captured tree. While the RBV allows us to faithfully reconstruct the main branching structure, we use the procedural model's morphological constraints to generate realistic branching for the tree crown. This constrains the number of possible tree models for a given photograph of a tree. We show that our method reconstructs various tree species even when the trees are captured in front of complex backgrounds. Moreover, although our neural networks have been trained on synthetic data with data augmentation, we show that our pipeline performs well on real tree photographs. We evaluate the reconstructed geometries with several metrics, including leaf area index and maximum radial tree distances.

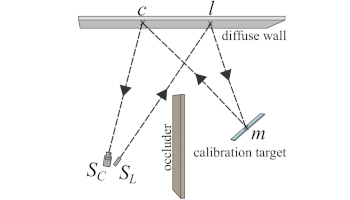

A Calibration Scheme for Non-Line-of-Sight Imaging Setups

In: Optics Express, 2020

Recent years have given rise to a large number of techniques for “looking around corners”, i.e., for reconstructing or tracking occluded objects from indirect light reflections off a wall. While the direct view of cameras is routinely calibrated in computer vision applications, the calibration of non-line-of-sight setups has so far relied on manual measurement of the most important dimensions (device positions, wall position and orientation, etc.). In this paper, we propose a method for calibrating time-of-flight-based non-line-of-sight imaging systems that relies on mirrors as known targets. A roughly determined initialization is refined in order to optimize for spatio-temporal consistency. Our system is general enough to be applicable to a variety of sensing scenarios, ranging from single sources/detectors via scanning arrangements to large-scale arrays. It is robust to poor initialization, and the achieved accuracy is proportional to the depth resolution of the camera system.

Approaches to solve inverse problems for optical sensing around corners

In: SPIE Security + Defence: Emerging Imaging and Sensing Technologies for Security and Defence IV, 2019

Optical non-line-of-sight sensing, or seeing around the corner, is a computational imaging approach describing a classical inverse problem in which information about a hidden scene has to be reconstructed from a set of indirect measurements. In the last decade, this field has been intensively studied by different groups using several sensing and reconstruction approaches. We focus on active sensing with two main concepts: the reconstruction of reflective surfaces by back-projection of time-of-flight data, and six-degree-of-freedom tracking of a rigid body from intensity images using an analysis-by-synthesis method. In the first case, the inverse problem can be approximately solved by back-projecting the transient data to obtain the geometric shape of the hidden scene. With intensity images, only the diffusely reflected, blurred intensity distribution is recorded, without any time information. This problem can be solved by an analysis-by-synthesis approach.

Inverse Procedural Modeling of Knitwear

In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019

The analysis and modeling of cloth has received a lot of attention in recent years. While recent approaches are focused on woven cloth, we present a novel practical approach for the inference of more complex knitwear structures as well as the respective knitting instructions from only a single image without attached annotations. Knitwear is produced by repeating instances of the same pattern, consisting of grid-like arrangements of a small set of basic stitch types. Our framework addresses the identification and localization of the occurring stitch types, which is challenging due to huge appearance variations. The resulting coarsely localized stitch types are used to infer the underlying grid structure as well as for the extraction of the knitting instruction of pattern repeats, taking into account principles of Gestalt theory. Finally, the derived instructions allow the reproduction of the knitting structures, either as renderings or by actual knitting, as demonstrated in several examples.

A Quantitative Platform for Non-Line-of-Sight Imaging Problems

In: British Machine Vision Conference (BMVC), 2018

The computational sensing community has recently seen a surge of works on imaging beyond the direct line of sight. However, most of the reported results rely on drastically different measurement setups and algorithms, and are therefore difficult, if not impossible, to compare quantitatively. In this paper, we focus on an important class of approaches, namely those that aim to reconstruct scene properties from time-resolved optical impulse responses. We introduce a collection of reference data and quality metrics that are tailored to the most common use cases, and we define reconstruction challenges that we hope will aid the development and assessment of future methods.

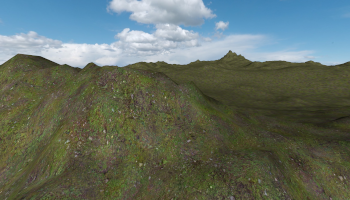

Multi-Scale Terrain Texturing using Generative Adversarial Networks

In: IEEE Conference on Image and Vision Computing New Zealand (IVCNZ), 2017

We propose a novel, automatic generation process for detail maps that allows the reduction of tiling artifacts in real-time terrain rendering. This is achieved by training a generative adversarial network (GAN) with a single input texture and subsequently using it to synthesize a huge texture spanning the whole terrain. The low-frequency components of the GAN output are extracted, down-scaled and combined with the high-frequency components of the input texture during rendering. This results in a terrain texture that is both highly detailed and non-repetitive, which eliminates the tiling artifacts without decreasing overall image quality. The rendering is efficient regarding both memory consumption and computational costs. Furthermore, it is orthogonal to other techniques for terrain texture improvements such as texture splatting and can directly be combined with them.
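
The frequency split described above can be sketched for a single grayscale channel in NumPy. Block averaging (low-pass plus down-scaling), a wrap-around box filter (to extract the high frequencies of the tileable input) and nearest-neighbour up-sampling are stand-ins we chose for illustration; the function names are hypothetical, the random arrays merely stand in for the input texture and the GAN output, and no GAN is trained here.

```python
import numpy as np


def box_blur(img, k):
    """Separable k x k box filter with wrap-around, so tileable textures stay tileable."""
    assert k % 2 == 1, "use an odd kernel size"
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        out = sum(np.roll(out, s, axis=axis) for s in range(-(k // 2), k // 2 + 1)) / k
    return out


def low_frequency_map(gan_texture, factor):
    """Low-pass and down-scale the large synthesized texture by averaging factor x factor blocks."""
    h, w = gan_texture.shape
    h, w = h - h % factor, w - w % factor
    blocks = gan_texture[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def shade_terrain(low_map, tile, factor, k=9):
    """Up-sampled low frequencies of the GAN output plus tiled high frequencies of the input."""
    low = np.repeat(np.repeat(low_map, factor, axis=0), factor, axis=1)  # nearest-neighbour up-sampling
    detail = tile - box_blur(tile, k)                                     # high-pass of the input texture
    reps = (-(-low.shape[0] // tile.shape[0]), -(-low.shape[1] // tile.shape[1]))  # ceiling division
    return low + np.tile(detail, reps)[: low.shape[0], : low.shape[1]]


rng = np.random.default_rng(1)
tile = rng.random((64, 64))             # stand-in for the single input texture
gan_texture = rng.random((1024, 1024))  # stand-in for the large GAN-synthesized texture
low_map = low_frequency_map(gan_texture, factor=16)
terrain = shade_terrain(low_map, tile, factor=16)
```

Only the small `low_map` and the original tile need to be stored; a real-time renderer would perform the up-sampling and tiling per fragment in a shader rather than materializing `terrain`.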

Wenn eine Wand kein Hindernis mehr ist (When a Wall Is No Longer an Obstacle)

In: photonik, 2017

A still very young subfield of computer vision deals with the localization and tracking of objects that are not in the direct field of view of a camera. Until now, this required expensive specialized cameras and laser illuminators with high temporal resolution. A new approach now solves this problem with off-the-shelf hardware.

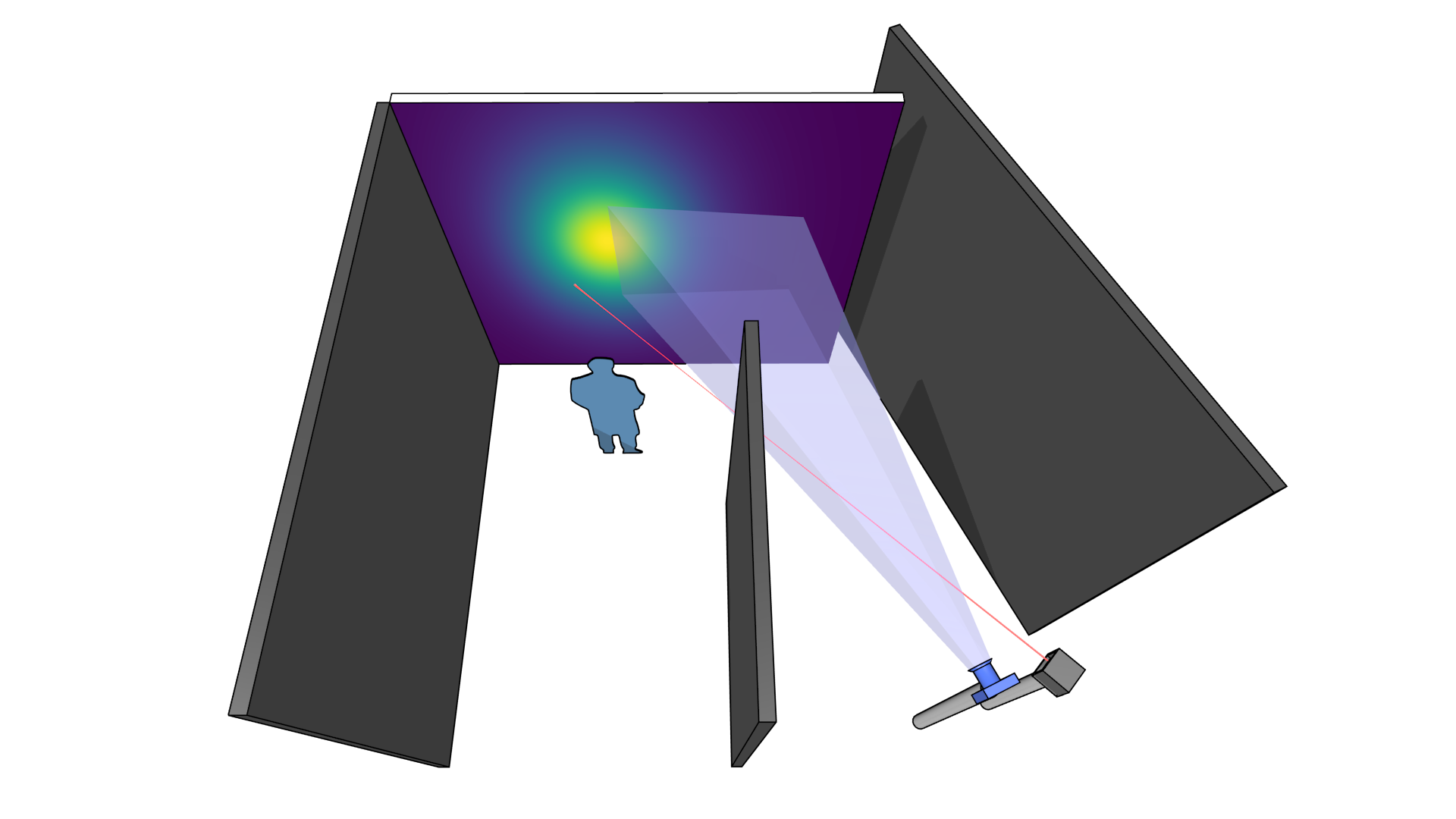

Non-line-of-sight MoCap

In: ACM SIGGRAPH Emerging Technologies, 2017

The sensing of objects hidden behind an occluder is a fascinating emerging area of research that is expected to have an impact in numerous application fields such as automotive safety, remote observation, and endoscopy. In the past, this problem has consistently been solved using expensive time-of-flight technology, often with long reconstruction times. Our system is the first to rely only on off-the-shelf intensity cameras and lasers. To achieve this, we developed a novel analysis-by-synthesis scheme that uses the output of a specialized renderer as input to an optimizer that performs the reconstruction. In the exhibition, visitors can freely move the object around in the hidden scene while our camera setup on the other side of the wall reconstructs the object's position and orientation in real time. We hope that this first-hand experience will spread excitement about possible future applications of this new technology.

Transient light imaging laser radar with advanced sensing capabilities: reconstruction of arbitrary light in flight path and sensing around a corner

In: SPIE Laser Radar Technology and Applications, 2017

Laser radars are widely used for laser detection and ranging in a wide range of application scenarios. In the recent past, transient light imaging has demonstrated new sensing capabilities, enabling the imaging of light pulses during flight and the estimation of the position and shape of targets outside the direct field of view. Highly sensitive imaging sensors are now available that make it possible to detect laser pulses in flight, which are sparsely scattered in air. Theoretical and experimental investigations of arbitrary light propagation paths show that the trajectory of a light path can be reconstructed. This result could enable the remote localization of laser sources, which will become an important reconnaissance task in future scenarios. Further, transient light imaging can reveal information about objects outside the direct field of view or hidden behind an obscuration by means of computational imaging. In this talk, we present different approaches to realizing transient light imaging (e.g., time-correlated single photon counting) and their application to relativistic imaging, real-time non-line-of-sight tracking, and reconstruction of hidden scenes.

Dual-mode optical sensing: three-dimensional imaging and seeing around a corner

In: SPIE Optical Engineering, 2017

The application of non-line-of-sight (NLoS) vision and seeing around a corner has been demonstrated in the recent past at laboratory level with round-trip path lengths on the scale of 1 m as well as 10 m. This method uses a computational imaging approach to analyze the scattered information of objects that are hidden from the sensor’s direct field of view. Detailed knowledge of the scattering surfaces is necessary for the analysis. The authors evaluate the realization of dual-mode concepts with the aim of collecting all information necessary to enable both direct three-dimensional imaging of a scene and indirect sensing of hidden objects. Two different sensing approaches, laser gated viewing (LGV) and time-correlated single-photon counting, are investigated, operating at laser wavelengths of 532 and 1545 nm, respectively. While LGV sensors have high spatial resolution, their application to NLoS sensing suffers from low temporal resolution, i.e., a minimal gate width of 2 ns. On the other hand, Geiger-mode single-photon counting devices have high temporal resolution (250 ps), but their array size is limited to a few thousand sensor elements. The authors present detailed theoretical and experimental evaluations of both sensing approaches.

Transient Imaging for Real-Time Tracking Around a Corner

In: SPIE Electro-Optical Remote Sensing, 2016

Non-line-of-sight imaging is a fascinating emerging area of research and is expected to have an impact in numerous application fields, including civilian and military sensing. The performance of human perception and situational awareness can be extended by sensing shapes and movement around a corner in future scenarios. Rather than seeing through obstacles directly, non-line-of-sight imaging relies on analyzing indirect reflections of light that has traveled around the obstacle. In previous work, transient imaging was established as the key mechanism enabling the extraction of useful information from such reflections. So far, a number of different approaches based on transient imaging have been proposed, with back projection being the most prominent one. Different hardware setups have been used to acquire the required data; however, all of them have severe drawbacks such as limited image quality, long capture times, or very high prices. In this paper, we propose analyzing synthetic transient renderings to gain more insight into transient light transport. With this simulated data, we are no longer bound to the imperfect data of real systems and gain more flexibility and control over the analysis. In a second part, we use the insights of our analysis to formulate a novel reconstruction algorithm. It uses an adapted light simulation to formulate an inverse problem, which is solved in an analysis-by-synthesis fashion. Through rigorous optimization of the reconstruction, it then becomes possible to track known objects outside the line of sight in real time. Due to the forward formulation of light transport, the algorithm is easily extendable to more general scenarios or different hardware setups. We therefore expect it to become a viable alternative to the classic back-projection approach in the future.

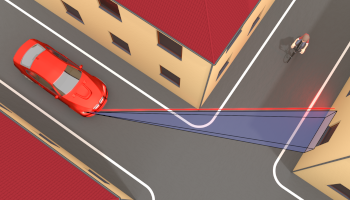

Tracking objects outside the line of sight using 2D intensity images

In: Scientific Reports, 2016

The observation of objects located in inaccessible regions is a recurring challenge in a wide variety of important applications. Recent work has shown that using rare and expensive optical setups, indirect diffuse light reflections can be used to reconstruct objects and two-dimensional (2D) patterns around a corner. Here we show that occluded objects can be tracked in real time using much simpler means, namely a standard 2D camera and a laser pointer. Our method fundamentally differs from previous solutions by approaching the problem in an analysis-by-synthesis sense. By repeatedly simulating light transport through the scene, we determine the set of object parameters that most closely fits the measured intensity distribution. We experimentally demonstrate that this approach is capable of following the translation of unknown objects, and translation and orientation of a known object, in real time.
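
The analysis-by-synthesis idea can be illustrated with a deliberately tiny 2D example: a forward model renders the intensity a hidden point would produce on a row of wall pixels, and a search adjusts the point's position until the rendering matches the measurement. The falloff model (1/r^2 per bounce plus a cosine at the wall, with a known albedo), the brute-force coarse-to-fine grid search and all names are hypothetical simplifications, not the renderer or optimizer used in the paper.

```python
import numpy as np


def render(p, wall_x, laser=(0.0, 0.0)):
    """Toy 2D forward model: intensity seen at wall points (x, 0) from a hidden point p.

    The laser spot on the wall lights the hidden point, which re-emits light diffusely
    back to the wall; falloff is 1/r^2 per bounce with a cosine term at the wall.
    """
    p = np.asarray(p, dtype=float)
    d_laser = np.hypot(p[0] - laser[0], p[1] - laser[1])  # laser spot -> hidden point
    d_wall = np.hypot(p[0] - wall_x, p[1])                 # hidden point -> each wall point
    cos_wall = p[1] / d_wall                               # wall normal is +y
    return cos_wall / (d_laser**2 * d_wall**2)


def fit_position(measured, wall_x, center=(0.0, 1.0), half_width=1.0, levels=12, n=21):
    """Analysis by synthesis: coarse-to-fine grid search for the best-matching rendering."""
    best = np.asarray(center, dtype=float)
    for _ in range(levels):
        xs = np.linspace(best[0] - half_width, best[0] + half_width, n)
        ys = np.linspace(best[1] - half_width, best[1] + half_width, n)
        ys = ys[ys > 1e-3]                                 # object must stay in front of the wall
        candidates = [(x, y) for x in xs for y in ys]
        errors = [np.sum((render(c, wall_x) - measured) ** 2) for c in candidates]
        best = np.asarray(candidates[int(np.argmin(errors))])
        half_width /= 2.0                                  # zoom in around the current best fit
    return best


wall_x = np.linspace(-1.0, 1.0, 41)  # observed wall points
true_pos = np.array([0.3, 0.8])      # hidden object position (unknown to the fit)
measured = render(true_pos, wall_x)  # synthetic, noise-free "measurement"
estimate = fit_position(measured, wall_x)
```

The same loop structure carries over to richer scene parameters such as orientation, at the cost of a more expensive renderer and a better optimizer than grid search.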

Sensing and reconstruction of arbitrary light-in-flight paths by a relativistic imaging approach

In: SPIE, 2016

Transient light imaging is an emerging technology and an interesting sensing approach for fundamental multidisciplinary research ranging from computer science to remote sensing. Recent developments in sensor technologies and computational imaging have made this approach a candidate for next-generation sensor systems; its maturity is increasing rapidly, but it still relies on laboratory technology demonstrations. At ISL, transient light sensing is investigated by time-correlated single photon counting (TCSPC). An eye-safe shortwave infrared (SWIR) TCSPC setup, consisting of an avalanche photodiode array and a pulsed fiber laser source, is used to investigate sparsely scattered light while it propagates through air. Fundamental investigations of light in flight are carried out with the aim of reconstructing arbitrary light propagation paths. Light pulses are observed in flight at various propagation angles and distances. As demonstrated, arbitrary light paths can be distinguished due to a relativistic effect that distorts temporal signatures. A novel method analyzing the time difference of arrival (TDOA) is used to determine the propagation angle and distance with respect to this relativistic effect. Based on our results, the performance of future laser warning receivers can be improved by using single photon counting imaging devices. They can detect laser light even when the laser does not directly hit the sensor but passes at a certain distance.

Relativistic effects in imaging of light in flight with arbitrary paths

In: Optics Letters, 2016

Direct observation of light in flight is enabled by recent avalanche photodiode arrays, which have the capability for time-correlated single photon counting. In contrast to classical imaging, imaging of light in flight depends on the relative sensor position, which is studied in detail by measurement and analysis of light pulses propagating at different angles. The time differences of arrival are analyzed to determine the propagation angle and distance of arbitrary light paths. Further analysis of the apparent velocity shows that light pulses can appear to travel at superluminal or subluminal apparent velocities.
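
A simple far-field calculation shows where superluminal and subluminal apparent velocities come from. Assume a distant sensor and a pulse travelling at speed c at angle theta to the direction pointing from the pulse toward the sensor: during a time dt the pulse moves c·dt·sin(theta) sideways, but its light reaches the sensor only dt·(1 - cos(theta)) later, giving an apparent speed c·sin(theta) / (1 - cos(theta)) = c·cot(theta/2). This is a textbook approximation for illustration, not the detailed analysis of the paper, and the function name is ours.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def apparent_transverse_velocity(theta, c=C):
    """Apparent image-plane speed of a light pulse seen by a distant sensor.

    theta: angle in radians (0 < theta < pi) between the pulse's direction of travel
    and the direction from the pulse toward the sensor (far-field assumption).
    """
    if not 0.0 < theta < math.pi:
        raise ValueError("theta must lie strictly between 0 and pi")
    # In time dt the pulse advances c*dt; the part c*dt*cos(theta) shortens its path to
    # the sensor, so arrival times differ by only dt*(1 - cos(theta)) while the
    # transverse displacement is c*dt*sin(theta).
    return c * math.sin(theta) / (1.0 - math.cos(theta))  # equals c * cot(theta / 2)


for deg in (60, 90, 120):
    ratio = apparent_transverse_velocity(math.radians(deg)) / C
    print(f"{deg:3d} deg: {ratio:.2f} c")
```

For 60, 90 and 120 degrees this gives about 1.73c, c and 0.58c: pulses heading toward the sensor appear superluminal, those heading away appear subluminal.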

Material Classification Using Raw Time-of-Flight Measurements

In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016

We propose a material classification method using raw time-of-flight (ToF) measurements. ToF cameras capture the correlation between a reference signal and the temporal response of a material to incident illumination. Such measurements encode unique signatures of the material, i.e., the degree of subsurface scattering inside a volume. Consequently, they offer a feature domain that is orthogonal to conventional spatial and angular reflectance-based approaches. We demonstrate the effectiveness, robustness, and efficiency of our method through experiments and comparisons on real-world materials.

Study of single photon counting for non-line-of-sight vision

In: SPIE, 2015

The application of non-line-of-sight vision has been demonstrated in the recent past at laboratory level with round-trip path lengths on the scale of 1 m as well as 10 m. This method uses a computational imaging approach to analyze the scattered information of objects that are hidden from the sensor's direct field of view. In the present work, the authors evaluate the application of recent single photon counting devices for non-line-of-sight sensing and give predictions on range and resolution. Further, the realization of a concept enabling the indirect view of a hidden scene is studied. Different approaches based on ICCD and GM-APD or SPAD sensor technologies are reviewed. Recent laser gated viewing sensors have a minimal temporal resolution of around 2 ns due to their sensor gate widths. Single photon counting devices have higher sensitivity and higher temporal resolution.

Multiple-return single-photon counting of light in flight and sensing of non-line-of-sight objects at shortwave infrared wavelengths

In: Optics Letters, 2015

Time-of-flight sensing with single-photon sensitivity enables new approaches for the localization of objects outside a sensor's field of view by analyzing backscattered photons. In this Letter, the authors study the application of Geiger-mode avalanche photodiode arrays and eye-safe infrared lasers, and provide experimental data on the direct visualization of backscattered light in flight, as well as on the direct and indirect vision of targets in line-of-sight and non-line-of-sight configurations at shortwave infrared wavelengths.

Solving Trigonometric Moment Problems for Fast Transient Imaging

In: ACM Transactions on Graphics (SIGGRAPH Asia), 2015

Transient images help to analyze light transport in scenes. In addition to two spatial dimensions, they are resolved in time of flight. Cost-efficient approaches for their capture use amplitude-modulated continuous-wave lidar systems but typically take more than a minute of capture time. We propose new techniques for the measurement and reconstruction of transient images that drastically reduce this capture time. To this end, we pose the reconstruction as a trigonometric moment problem. A vast body of mathematical literature provides powerful solutions to such problems. In particular, the maximum entropy spectral estimate and the Pisarenko estimate provide two closed-form solutions for reconstruction using continuous densities or sparse distributions, respectively. Both methods can separate m distinct returns using measurements at m modulation frequencies. For m = 3, our experiments with measured data confirm this. Our GPU-accelerated implementation can reconstruct more than 100,000 frames of a transient image per second. Additionally, we propose modifications of the capture routine to achieve the required sinusoidal modulation without increasing the capture time. This allows us to capture up to 18.6 transient images per second, leading to transient video. An important byproduct is a method for removing multipath interference in range imaging.
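
For the sparse case, the classical Pisarenko harmonic decomposition can be sketched in a few lines of NumPy. Assuming noise-free trigonometric moments c_k = sum_j a_j·exp(-i·k·phi_j) for k = 0 to m (with c_0 the zero-frequency intensity), the Hermitian Toeplitz matrix built from them has a null vector whose polynomial has its roots at exp(i·phi_j), so m returns are recovered from m modulation frequencies. The sign convention, the noise-free setting and the function name are assumptions; this is neither the authors' GPU implementation nor their handling of noise, and the maximum entropy estimate for continuous densities is not shown.

```python
import numpy as np


def pisarenko_returns(moments):
    """Recover m return phases and weights from m + 1 trigonometric moments.

    moments[k] = sum_j a_j * exp(-1j * k * phi_j) for k = 0..m (noise-free, a_j > 0).
    """
    c = np.asarray(moments, dtype=complex)
    m = len(c) - 1
    # Hermitian Toeplitz matrix T[r, s] = c_{r-s}, using c_{-k} = conj(c_k).
    T = np.empty((m + 1, m + 1), dtype=complex)
    for r in range(m + 1):
        for s in range(m + 1):
            T[r, s] = c[r - s] if r >= s else np.conj(c[s - r])
    # Eigenvector of the smallest eigenvalue (eigh returns eigenvalues in ascending order).
    _, vecs = np.linalg.eigh(T)
    u = vecs[:, 0]
    # The polynomial sum_r u_r z^r vanishes at z_j = exp(1j * phi_j); np.roots wants
    # coefficients ordered from the highest degree down.
    roots = np.roots(u[::-1])
    phases = np.mod(np.angle(roots), 2 * np.pi)
    # Weights from the (consistent) linear system c_k = sum_j a_j exp(-1j k phi_j).
    V = np.exp(-1j * np.outer(np.arange(m + 1), phases))
    weights, *_ = np.linalg.lstsq(V, c, rcond=None)
    order = np.argsort(phases)
    return phases[order], weights.real[order]


true_phases = np.array([0.5, 1.7, 4.0])   # hypothetical phase delays of three returns
true_weights = np.array([1.0, 0.4, 0.7])  # hypothetical return amplitudes
k = np.arange(4)                          # zero frequency plus m = 3 modulation frequencies
moments = (true_weights * np.exp(-1j * np.outer(k, true_phases))).sum(axis=1)
phases, weights = pisarenko_returns(moments)
```

Under the assumed convention, each phase maps to a delay via phi = 2·pi·f·tau for a base modulation frequency f. With white measurement noise, the classical estimator uses the same eigenvector and treats the smallest eigenvalue as the noise level to subtract from c_0 before solving for the weights.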