Multi-Scale Terrain Texturing using Generative Adversarial Networks

Jonathan Klein, Stefan Hartmann, Michael Weinmann, and Dominik L. Michels

Abstract

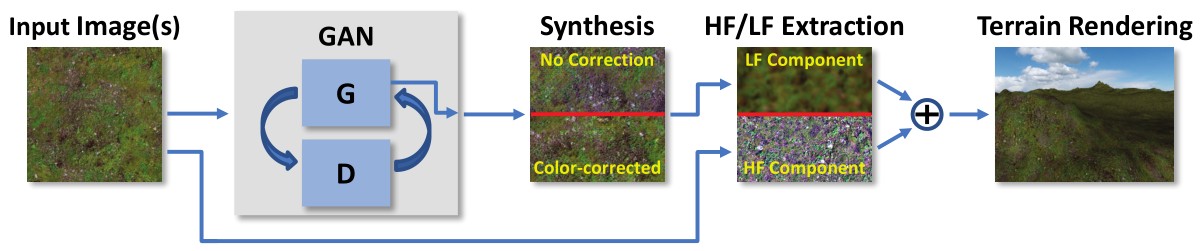

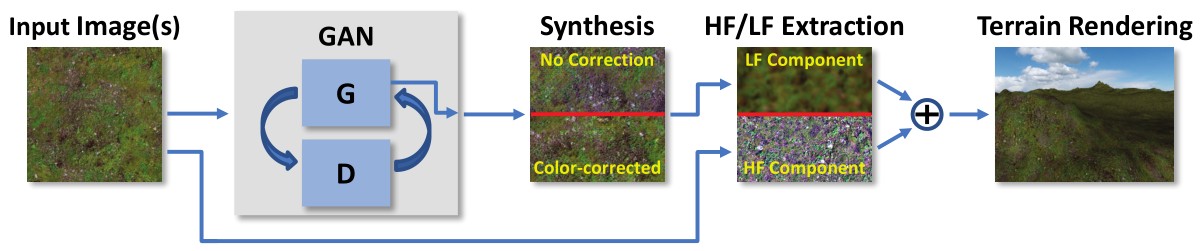

We propose a novel, automatic process for generating detail maps that reduces tiling artifacts in real-time terrain rendering. This is achieved by training a generative adversarial network (GAN) on a single input texture and then using it to synthesize a large texture that spans the whole terrain. The low-frequency components of the GAN output are extracted and down-scaled, then combined with the high-frequency components of the input texture during rendering. The result is a terrain texture that is both highly detailed and non-repetitive, which eliminates tiling artifacts without decreasing overall image quality. The rendering is efficient in terms of both memory consumption and computational cost. Furthermore, the method is orthogonal to other techniques for improving terrain textures, such as texture splatting, and can be combined with them directly.
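The frequency-splitting idea in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation. It makes several assumptions:

- It works on single-channel textures.
- A random placeholder array stands in for the trained GAN's output.
- A wrap-around box blur isolates the high frequencies of the (tileable) input texture.
- Block averaging serves as a crude low-pass filter and down-scaling step for the synthesized texture.
- The two components are combined by simple addition.

The function names (`box_blur_periodic`, `downscale`, `bilinear`, `shade`) and parameters (`radius`, `factor`, `repeats`) are hypothetical.

```python
import numpy as np

def box_blur_periodic(img: np.ndarray, radius: int) -> np.ndarray:
    """Box blur with wrap-around borders, suitable for a tileable texture."""
    acc = np.zeros_like(img, dtype=np.float64)
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            acc += np.roll(img, shift=(dy, dx), axis=(0, 1))
            count += 1
    return acc / count

def downscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Average non-overlapping factor x factor blocks (a crude low-pass + resize)."""
    h, w = img.shape[:2]
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    blocks = img.reshape(h // factor, factor, w // factor, factor, *img.shape[2:])
    return blocks.mean(axis=(1, 3))

def bilinear(img: np.ndarray, x, y):
    """Bilinearly sample a 2D image at continuous pixel coordinates, clamped to edges."""
    h, w = img.shape
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0, x0] * (1 - fx) + img[y0, x1] * fx
    bottom = img[y1, x0] * (1 - fx) + img[y1, x1] * fx
    return top * (1 - fy) + bottom * fy

def shade(u, v, low_map: np.ndarray, high_tile: np.ndarray, repeats: int):
    """Combine the non-repeating low-frequency map with tiled high-frequency detail.

    u, v in [0, 1) are terrain coordinates; the detail tile repeats `repeats`
    times across the terrain, while low_map covers the terrain exactly once.
    """
    lh, lw = low_map.shape
    low = bilinear(low_map, u * lw - 0.5, v * lh - 0.5)
    th, tw = high_tile.shape
    tx = np.floor(u * repeats * tw).astype(int) % tw  # nearest texel, wrapped
    ty = np.floor(v * repeats * th).astype(int) % th
    return low + high_tile[ty, tx]

rng = np.random.default_rng(0)
tile = rng.random((64, 64))          # placeholder for the single input texture
synth = rng.random((1024, 1024))     # placeholder for the GAN-synthesized texture

high = tile - box_blur_periodic(tile, radius=4)  # high-frequency part of the tile
low_map = downscale(synth, factor=16)            # 64 x 64 low-frequency map

u, v = np.meshgrid(np.linspace(0, 1, 256, endpoint=False),
                   np.linspace(0, 1, 256, endpoint=False))
image = shade(u, v, low_map, high, repeats=16)
print(image.shape)  # (256, 256)
```

In this sketch, only the down-scaled `low_map` (64 x 64 here instead of 1024 x 1024) is kept alongside the original tile. This is one plausible reading of why such a scheme can be memory-efficient. The paper's actual filtering, resolutions, and blending may differ.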

Paper

In: IEEE Conference on Image and Vision Computing New Zealand (IVCNZ), 2017


Demo

Download (~60 MB)

This demo shows the result of our method in a real-time application. Users can move the camera freely across the terrain and examine the tiling effects from various angles and distances. The screenshots in the paper were created with this demo.

The demo contains Windows binaries, but the included source code can also be compiled on other platforms.